Product Item: ONNX Azure new arrivals

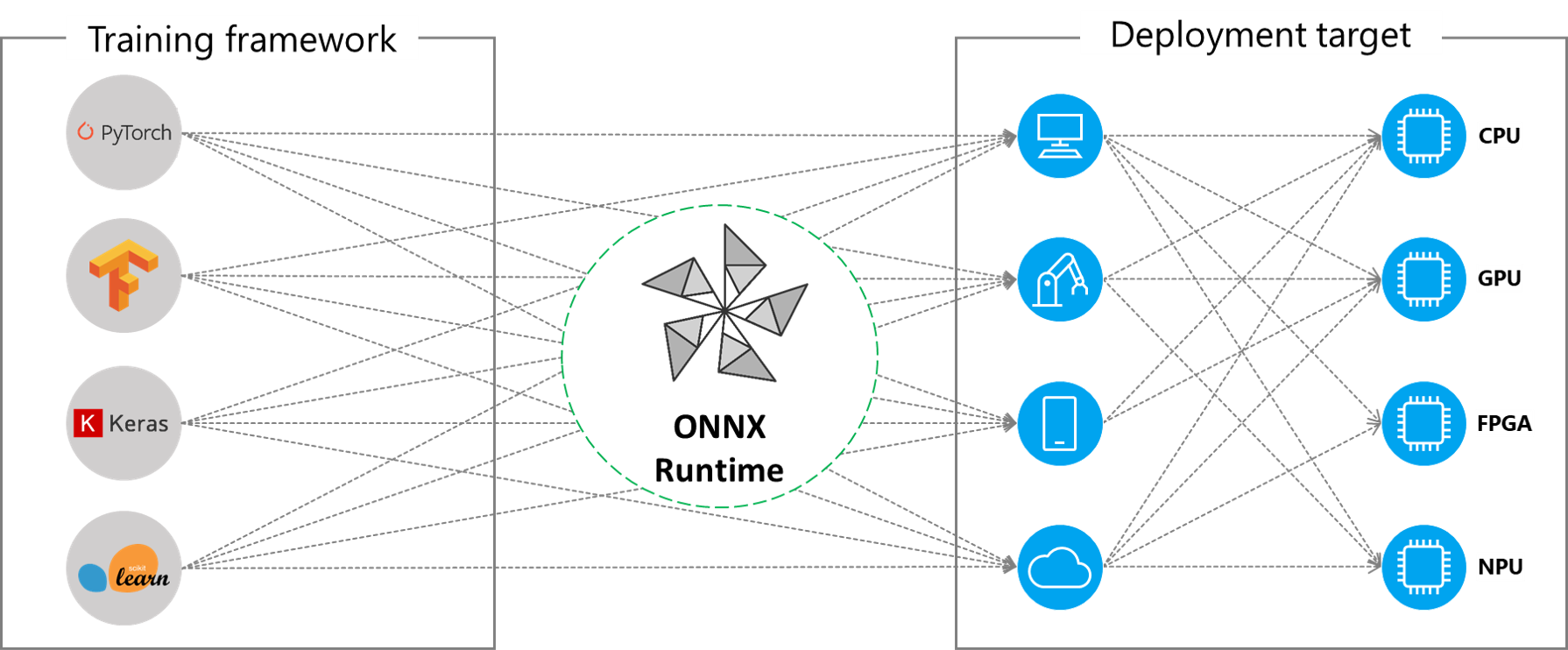

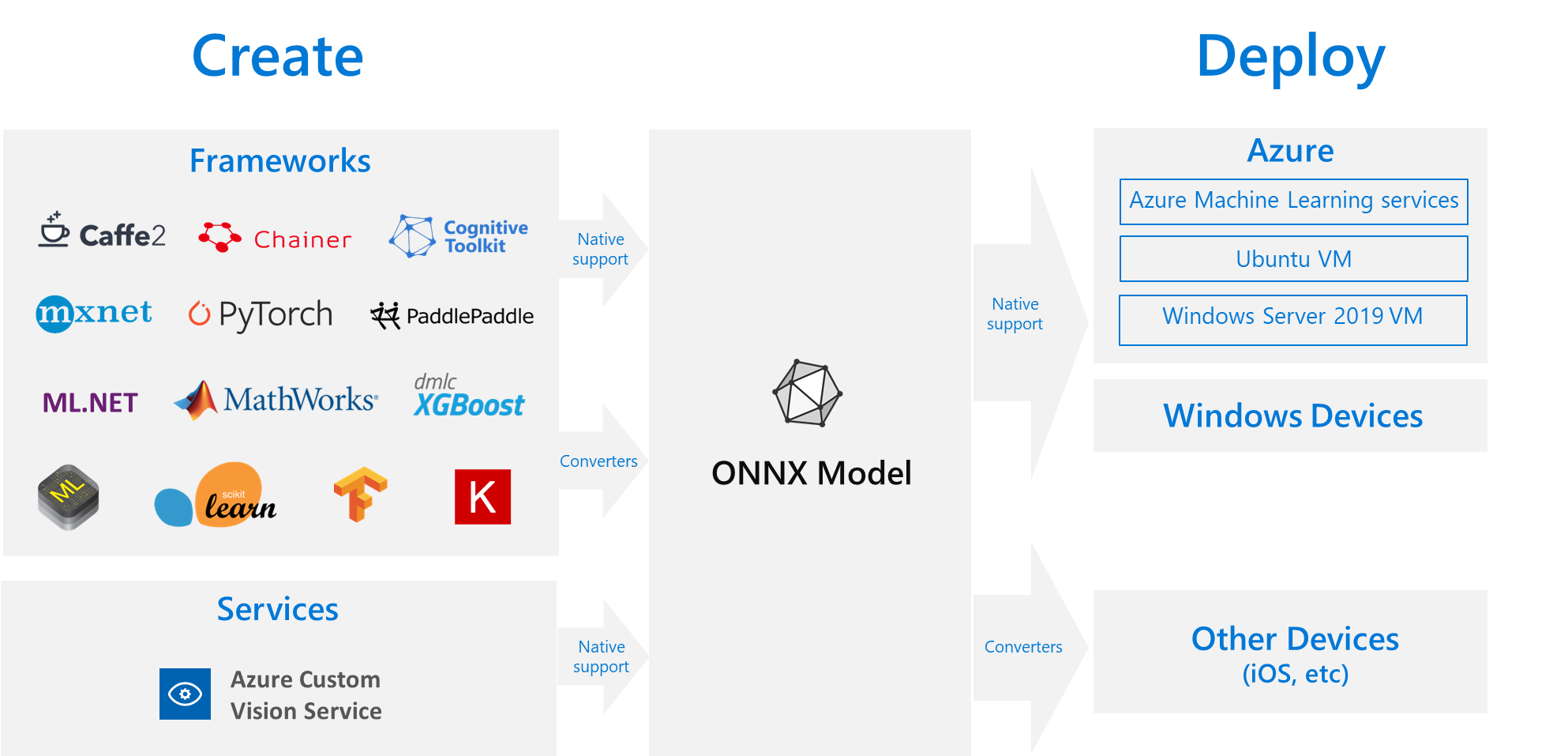

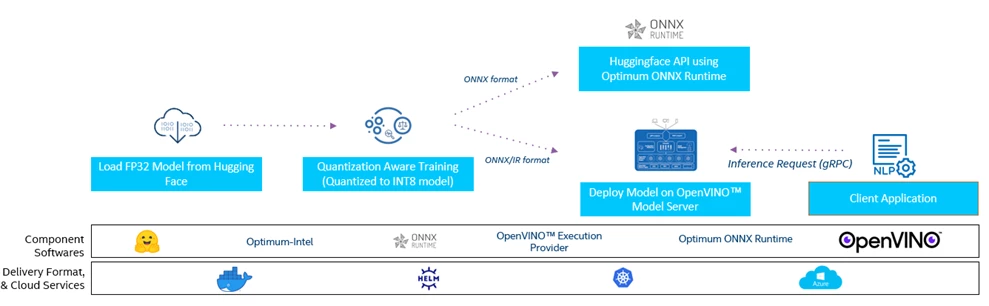

- ONNX models Optimize inference Azure Machine Learning
- ML Inference on Edge Devices with ONNX Runtime Using Azure DevOps
- ONNX Runtime for inferencing machine learning models now in
- OpenVINO ONNX Runtime and Azure improve BERT inference speed
- Local inference using ONNX for AutoML image Azure Machine
- Deploy an ONNX model to a Windows device AI Edge Community
- Deploy on AzureML onnxruntime
- onnxruntime onnxruntime X
- ONNX Runtime integration with NVIDIA TensorRT in preview Azure
- ONNX Runtime Azure EP for Hybrid Inferencing on Edge and Cloud
- GitHub Azure Samples AzureDevOps onnxruntime jetson ADO
- ONNX Runtime is now open source Microsoft Azure Blog
- Make predictions with AutoML ONNX Model in .NET Azure Machine
- 11\. Cross Platform AI with ONNX and .NET Azure AI Developer Hub
- GitHub Azure Samples Custom Vision ONNX UWP An example of how
- Consume Azure Custom Vision ONNX Models with ML.NET
- Azure AI and ONNX Runtime YouTube
- Boosting AI Model Inference Performance on Azure Machine Learning
- Train with Azure ML and deploy everywhere with ONNX Runtime
- Azure AI and ONNX Runtime A Dash of .NET Ep. 6
- GitHub okajax onnx runtime azure functions example ONNX Runtime
- Prasanth Pulavarthi on LinkedIn On Device Training with ONNX
- Enable machine learning inference on an Azure IoT Edge device
- Deploy Machine Learning Models with ONNX Runtime and Azure
- Train Machine learning model once and deploy it anywhere with ONNX optimization
- Unlocking the end to end Windows AI developer experience using
- Export trained model in Azure automated ML Interface Microsoft Q A
- The Institute for Ethical AI Machine Learning on X
- Deploying your Models to GPU with ONNX Runtime for Inferencing in
- gallery test ax src deploy onnx yolo model.ipynb at master Azure
- Onnx Repositories for Onnx models in Azure AI Gallery and
- Azure Synapse Possible to create deploy ONNX model to dedicated
- Execution Providers onnxruntime
